A coordinated offering makes resources available across a network, enabling users to access and utilize specific capabilities without needing to manage the underlying infrastructure. Consider, for example, the provision of computing power to various users within an organization, managed and scaled centrally, rather than requiring each user to maintain their own independent systems. This methodology streamlines operations and optimizes resource allocation.

The advantages of this type of offering include greater efficiency, lower costs through economies of scale, and improved reliability through centralized management and monitoring. Historically, these service-oriented architectures grew out of the evolution of centralized computing. Such setups reduce capital expenditures because equipment is shared, and they lower operating expenditures because dedicated teams manage that equipment efficiently.

This exposition provides a foundation for understanding more detailed topics such as implementation strategies, security considerations, and various applications across different industries.

1. Resource Availability

Resource availability constitutes a cornerstone of coordinated provision, directly impacting its effectiveness and overall value proposition. Consistent and reliable access to resources is paramount for fulfilling the intended purpose and ensuring user satisfaction.

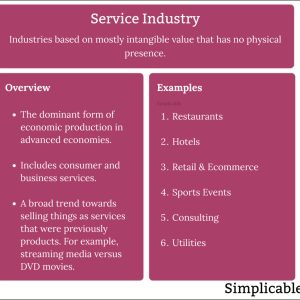

Suggested read: Comprehensive Guide to the Service Industry Definition

-

Uptime Guarantee

An uptime guarantee is the committed percentage of time that a resource is operational and accessible. A higher guarantee commits the provider to less downtime, although it is a contractual promise rather than a measurement of actual reliability. Failure to meet the stipulated uptime degrades performance and erodes user trust. Service level agreements (SLAs), for example, commonly express the guarantee as a figure such as “99.99% uptime,” which signals a commitment to minimal downtime and therefore high availability.
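Converting an uptime percentage into a downtime budget makes the figure concrete. The Python sketch below does that arithmetic. The function name `allowed_downtime_minutes` is hypothetical. The calculation assumes a 365-day year with no maintenance exclusions; real SLAs usually define their own measurement windows and exclusions.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; leap years ignored

def allowed_downtime_minutes(uptime_percent: float,
                             period_minutes: int = MINUTES_PER_YEAR) -> float:
    """Return the maximum downtime (in minutes) an uptime guarantee permits over a period."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return period_minutes * (1 - uptime_percent / 100)

for target in (99.0, 99.9, 99.99):
    print(f"{target}% -> {allowed_downtime_minutes(target):.1f} min/year")
```

A 99.99% guarantee works out to roughly 52.6 minutes of downtime per year. Over a 30-day month (43,200 minutes), it allows about 4.3 minutes.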

-

Capacity Planning

Capacity planning involves proactively assessing and managing the available resources to meet anticipated demand. Inadequate capacity planning results in performance bottlenecks, service interruptions, and user dissatisfaction. Proper capacity planning ensures adequate processing power, storage capacity, and network bandwidth are available to support the needs of users. This requires continuous monitoring of usage patterns and anticipating future requirements.
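A minimal capacity-planning calculation projects peak demand forward and sizes the fleet with some headroom. The sketch below assumes the following:

- demand grows at a constant compound monthly rate;
- servers are interchangeable, each with a known requests-per-second capacity;
- a 70% target utilization is an acceptable safety margin.

The function and parameter names are illustrative only.

```python
import math

def servers_needed(current_peak_rps: float, monthly_growth: float, months_ahead: int,
                   capacity_per_server_rps: float, target_utilization: float = 0.7) -> int:
    """Project peak demand with compound monthly growth, then size the fleet so
    each server stays at or below target_utilization at the projected peak."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    projected_peak = current_peak_rps * (1 + monthly_growth) ** months_ahead
    usable_per_server = capacity_per_server_rps * target_utilization
    return math.ceil(projected_peak / usable_per_server)

# 1,000 req/s today, 5% growth per month, planning 12 months ahead,
# 200 req/s per server, keeping 30% headroom.
print(servers_needed(1000, 0.05, 12, 200))  # 13
```

In practice, the growth rate would come from the continuous usage monitoring described above rather than from a fixed guess.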

-

Redundancy and Failover

Redundancy and failover mechanisms provide alternative resources in the event of a primary resource failure. Redundancy involves replicating critical components and data to ensure continuity of operations. Failover refers to the automated switching from a failed resource to a backup resource. These mechanisms minimize service disruptions and ensure that resources remain available even in the face of unforeseen events, increasing the overall resilience of coordinated access provision.
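The core failover decision can be sketched as choosing the first healthy resource from a priority-ordered list. The example assumes a hypothetical health-check callable supplied by the caller. A real system would also handle the following, which this sketch leaves out:

- health-check timeouts;
- hysteresis, so that traffic does not flap between resources;
- data replication lag.

```python
from typing import Callable, Sequence

def select_resource(resources: Sequence[str], is_healthy: Callable[[str], bool]) -> str:
    """Return the first healthy resource in priority order (primary first)."""
    for resource in resources:
        if is_healthy(resource):
            return resource
    raise RuntimeError("no healthy resource available")

# Hypothetical health status: the primary has failed, both replicas are up.
status = {"primary": False, "replica-a": True, "replica-b": True}
print(select_resource(["primary", "replica-a", "replica-b"], lambda r: status[r]))  # replica-a
```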

-

Geographic Distribution

Geographic distribution of resources increases availability by mitigating the impact of regional outages or disasters. Distributing resources across multiple geographic locations enhances the resilience of the service and reduces latency for users in different regions. Geographic distribution also supports compliance with data residency regulations. By strategically locating resources, service providers can ensure availability while meeting regional regulatory requirements.
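One way to combine lower latency with data-residency compliance is to filter regions by permitted jurisdiction first, then pick the fastest remaining one. In this sketch, the region names, jurisdictions, and latency figures are all hypothetical, and latency is assumed to have been measured from the user's location beforehand.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Region:
    name: str
    jurisdiction: str   # where data stored in this region resides
    latency_ms: float   # measured round-trip time from the user (hypothetical)

def pick_region(regions: list[Region], allowed_jurisdictions: set[str]) -> Region:
    """Choose the lowest-latency region that satisfies the data-residency constraint."""
    eligible = [r for r in regions if r.jurisdiction in allowed_jurisdictions]
    if not eligible:
        raise LookupError("no region satisfies the residency constraint")
    return min(eligible, key=lambda r: r.latency_ms)

regions = [
    Region("eu-west", "EU", 40.0),
    Region("us-east", "US", 15.0),
    Region("eu-central", "EU", 25.0),
]
# us-east is fastest, but an EU-only residency rule excludes it.
print(pick_region(regions, {"EU"}).name)  # eu-central
```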

The facets of uptime guarantee, capacity planning, redundancy/failover, and geographic distribution all contribute to overall resource availability, which is a determining factor in the success of any coordinated provision model. A service lacking in one or more of these areas will likely experience performance issues and reliability problems, ultimately diminishing its value to the end-user.

2. Network Accessibility

Network accessibility serves as a fundamental enabler for coordinated resource provision. Without effective network accessibility, the benefits of centralized management and optimized resource allocation are severely diminished. The ability for users to readily connect to and utilize distributed resources directly determines the practical value of the offering. Consider a global content delivery network; its effectiveness hinges on users’ ability to quickly and reliably access content from geographically dispersed servers. In this context, poor network accessibility translates to slow load times and a degraded user experience, negating the advantages of content distribution.

Furthermore, the type and quality of network access strongly affect the viability of coordinated resources. Wide area networks (WANs) connect geographically remote locations. Local area networks (LANs) connect resources within a limited area such as a building or campus. Cloud environments connect resources through virtual networking. Network access considerations include bandwidth, latency, security, and reliability. For example, applications requiring high throughput and low latency, such as real-time data analytics, demand network infrastructure capable of meeting stringent performance requirements. Security protocols and firewalls also play a critical role in protecting distributed resources from unauthorized access, which shows how many dimensions network accessibility has in these services.
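A first-order estimate of transfer time shows why latency and bandwidth matter in different situations. The estimate is round-trip delay plus serialization time. The sketch deliberately ignores TCP slow start, packet loss, and protocol overhead. The link speeds and round-trip times are illustrative assumptions.

```python
def transfer_time_s(size_bytes: float, bandwidth_bps: float, rtt_s: float,
                    round_trips: int = 1) -> float:
    """Estimate transfer time as round-trip delays plus size / bandwidth.
    Ignores TCP slow start, packet loss, and protocol overhead."""
    return round_trips * rtt_s + (size_bytes * 8) / bandwidth_bps

small_lan = transfer_time_s(10_000, 1e9, 0.0005)  # 10 KB over a 1 Gbit/s LAN, 0.5 ms RTT
small_wan = transfer_time_s(10_000, 1e9, 0.080)   # same request over an 80 ms WAN path
large_wan = transfer_time_s(1e9, 100e6, 0.080)    # 1 GB over 100 Mbit/s, 80 ms RTT
print(f"{small_lan * 1000:.2f} ms, {small_wan * 1000:.2f} ms, {large_wan:.1f} s")
```

The small request slows from about 0.6 ms to about 80 ms purely because of latency. The 1 GB transfer, by contrast, is dominated by bandwidth (about 80 s on the wire).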

In summary, the efficacy of coordinated resource arrangements is intrinsically linked to the underlying network infrastructure. Network accessibility dictates resource availability, affects performance, and influences security. Understanding this relationship allows for better design, implementation, and management of these arrangements. Challenges such as network congestion, security threats, and geographic limitations must be addressed to realize their full potential. Network considerations are therefore critical in the architecture of coordinated solutions. Addressing them early helps ensure that the benefits of distribution are not undermined by network bottlenecks or vulnerabilities.

3. Centralized Management

Centralized management functions as the linchpin of effective coordinated resource arrangements. Without a unified control plane, the complexities of distribution quickly become unmanageable, leading to inefficiencies, inconsistencies, and security vulnerabilities. The reasoning is straightforward: distributed resources operate in disparate environments, so running them consistently requires centralized control. Centralized management provides the oversight and coordination needed to meet that challenge. Consider a multinational corporation utilizing a coordinated computing infrastructure. Centralized management enables IT administrators to enforce security policies uniformly across all global locations, ensuring that every system adheres to the same standards. Without this oversight, the risk of security breaches, data inconsistencies, and operational disorder rises sharply. This is why centralized management is an essential component of coordinated distribution.
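Uniform policy enforcement can be illustrated as a drift check: a central baseline is compared against each node's reported configuration. The sketch is a minimal version under stated assumptions. The policy keys, node names, and the reporting format (a plain dictionary per node) are all hypothetical.

```python
# Hypothetical baseline pushed from the central control plane.
BASELINE = {"tls_min_version": "1.2", "ssh_password_auth": False, "audit_logging": True}

def find_policy_drift(nodes: dict[str, dict[str, object]],
                      baseline: dict[str, object]) -> dict[str, list[str]]:
    """Return, per node, the baseline keys whose values differ (a missing key counts as drift)."""
    drift: dict[str, list[str]] = {}
    for node, config in nodes.items():
        mismatched = [key for key, expected in baseline.items() if config.get(key) != expected]
        if mismatched:
            drift[node] = mismatched
    return drift

fleet = {
    "fra-01": {"tls_min_version": "1.2", "ssh_password_auth": False, "audit_logging": True},
    "sgp-02": {"tls_min_version": "1.2", "ssh_password_auth": True},
}
print(find_policy_drift(fleet, BASELINE))  # {'sgp-02': ['ssh_password_auth', 'audit_logging']}
```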

The practical significance of centralized management extends beyond security and compliance. It also covers resource allocation, performance monitoring, and problem resolution. For example, consider a cloud-based content delivery network. Centralized management lets administrators allocate bandwidth dynamically to different geographic regions based on real-time demand. This maximizes performance for end-users while optimizing resource utilization across the entire network. Centralized monitoring, in turn, gives administrators a comprehensive view of system performance, so they can identify and resolve issues before those issues affect users. This proactivity is instrumental in maintaining high availability and a positive user experience. The financial services sector offers another illustration: a bank may use a centralized system to manage data storage across multiple servers.
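Proactive issue detection from a central vantage point can be as simple as aggregating recent samples per node and flagging outliers. The sketch below assumes CPU utilization is reported as fractions between 0.0 and 1.0. Both the 0.85 threshold and the node names are illustrative assumptions, not recommendations.

```python
from statistics import mean

def flag_hotspots(cpu_by_node: dict[str, list[float]], threshold: float = 0.85) -> list[str]:
    """Average each node's recent CPU samples (0.0-1.0) and return, sorted by name,
    the nodes whose average exceeds threshold. Nodes with no samples are skipped."""
    return sorted(node for node, samples in cpu_by_node.items()
                  if samples and mean(samples) > threshold)

samples = {
    "lon-1": [0.90, 0.95, 0.88],
    "nyc-1": [0.40, 0.50, 0.45],
    "tok-1": [],
}
print(flag_hotspots(samples))  # ['lon-1']
```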

In conclusion, centralized management is an indispensable aspect of effective coordinated access. It provides the control and coordination necessary to overcome the challenges inherent in distributed systems. Implementing it can present technical and organizational challenges, but the resulting gains in security, efficiency, and manageability generally outweigh the costs. Ultimately, a well-designed and effectively implemented centralized management system is key to unlocking the full potential of distributed resources.

4. Scalable Architecture

Scalable architecture forms a critical foundation for effective distribution, enabling it to adapt to changing demands without compromising performance or availability. A well-designed, scalable architecture ensures that a distributed service can accommodate increased user traffic, data volume, and functional complexity, all while maintaining acceptable response times. The cause-and-effect relationship is clear: increased demand necessitates increased capacity, which is only achievable through a scalable architectural design. For example, consider a video streaming platform; as the number of subscribers increases, the underlying infrastructure must scale to handle the additional bandwidth and processing requirements. Without a scalable architecture, the platform would suffer from buffering issues, reduced video quality, and potential service outages. Scalable architectures are designed to enhance capacity through mechanisms such as load balancing, horizontal scaling, and dynamic resource allocation.
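Load balancing and horizontal scaling fit together naturally: adding a backend to the pool immediately spreads traffic over more machines. The round-robin balancer below is a minimal, single-threaded sketch of that idea. The class and backend names are hypothetical. Production balancers also track health, weights, and connection counts.

```python
class RoundRobinBalancer:
    """Distribute requests evenly across interchangeable backends.
    A backend added at runtime (horizontal scaling) joins the rotation."""

    def __init__(self, backends: list[str]):
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        self._next = 0  # index of the backend that receives the next request

    def add_backend(self, backend: str) -> None:
        self._backends.append(backend)

    def route(self) -> str:
        backend = self._backends[self._next]
        self._next = (self._next + 1) % len(self._backends)
        return backend

lb = RoundRobinBalancer(["app-1", "app-2"])
print([lb.route() for _ in range(3)])  # ['app-1', 'app-2', 'app-1']
lb.add_backend("app-3")                # scale out
print([lb.route() for _ in range(3)])  # ['app-2', 'app-3', 'app-1']
```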

The importance of scalable architecture as a core component of distributed arrangements becomes apparent when considering real-world applications. Cloud computing, for instance, relies heavily on scalable architectures to provide on-demand resources to users. The ability to scale computing power, storage, and network bandwidth in response to fluctuating workloads is a fundamental characteristic of cloud services. This scalability enables businesses to avoid over-provisioning resources, thereby reducing costs and improving efficiency. Similarly, social media platforms rely on scalable databases and distributed processing systems to handle massive amounts of user-generated content. A scalable architecture enables these platforms to accommodate rapid growth in user base and content volume without experiencing performance degradation. The practical significance of understanding the role of scalable architecture lies in its impact on the overall effectiveness and cost-efficiency of distributed deployments. Organizations can optimize resource utilization, reduce operational expenses, and improve the user experience by incorporating scalability into the design of distributed solutions.
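On-demand scaling is often driven by a target-tracking rule: the replica count is scaled by the ratio of observed to target utilization. The Kubernetes Horizontal Pod Autoscaler documents a rule of this shape, including a tolerance band that suppresses small adjustments. The sketch below is an illustrative re-implementation, not any platform's actual code. The default bounds and the 0.1 tolerance are assumptions.

```python
import math

def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float, min_replicas: int = 1,
                     max_replicas: int = 20, tolerance: float = 0.1) -> int:
    """Scale the replica count by observed/target utilization, skip changes
    inside the tolerance band, and clamp to [min_replicas, max_replicas]."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target; avoids flapping
    proposed = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, proposed))

print(desired_replicas(4, 90, 60))   # 6: load is 1.5x the target, so scale out
print(desired_replicas(10, 20, 60))  # 4: utilization is low, so scale in
```

Clamping to a maximum protects against runaway costs during traffic spikes. The minimum keeps some capacity warm for sudden demand.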

In conclusion, scalable architecture is indispensable for any successful distribution model. A scalable architecture must be integrated into the initial design phase. This provides a framework for the system to evolve and adapt to changing demands without requiring complete overhauls. The ability to scale seamlessly is not merely a desirable feature but a fundamental requirement for ensuring the long-term viability and success of distribution. As distributed environments become increasingly complex and interconnected, the importance of scalable architecture will only continue to grow.

5. Optimized Allocation

Optimized allocation is a critical dimension of effective resource management within a distributed service. Without strategic distribution of resources, the potential benefits of distribution, such as scalability and redundancy, are significantly diminished. The link between optimized allocation and overall performance is clear: resources must be deployed strategically to maximize efficiency and minimize latency. In a content delivery network, for example, optimized allocation involves directing user requests to the server geographically closest to the user, which reduces latency and improves download speeds. This strategic placement translates directly into a better user experience and more efficient use of network infrastructure. Spreading requests across nearby servers in this way also reduces the strain on any single component.
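The "closest server" rule can be sketched with the haversine great-circle distance. Geographic distance is only a proxy for latency; production CDNs typically rely on measured latency, DNS-based steering, or anycast routing. The server list and coordinates below are hypothetical and approximate.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius

def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance between two (latitude, longitude) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))

# Hypothetical edge locations (approximate city coordinates).
SERVERS = {
    "frankfurt": (50.11, 8.68),
    "virginia": (38.90, -77.04),
    "singapore": (1.35, 103.82),
}

def nearest_server(user: tuple[float, float], servers: dict[str, tuple[float, float]]) -> str:
    """Route the user to the server with the smallest great-circle distance."""
    return min(servers, key=lambda name: haversine_km(user, servers[name]))

print(nearest_server((48.86, 2.35), SERVERS))  # user in Paris -> frankfurt
```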

Optimized allocation also plays a crucial role in cost management and resource utilization. By adjusting allocation dynamically to real-time demand, distributed environments can avoid two problems: over-provisioning, which wastes resources, and under-provisioning, which degrades performance. Consider a cloud computing platform. Such platforms use optimized allocation to scale computing resources with fluctuating demand, so users pay only for the resources they actually consume. In practice, this translates directly into cost savings and improved resource efficiency. Keeping fewer resources idle can also reduce energy consumption and, with it, the service's environmental footprint.
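The saving from tracking demand instead of provisioning for peak is simple arithmetic. The sketch below makes several idealized assumptions:

- scaling is instantaneous;
- billing is per unit-hour;
- there are no minimum commitments.

The demand profile and price are invented for illustration. Real savings depend on scaling granularity and provider pricing.

```python
def provisioning_costs(hourly_demand: list[int], price_per_unit_hour: float) -> tuple[float, float]:
    """Return (static_cost, elastic_cost): a fleet fixed at peak size for every hour
    versus one that exactly tracks hourly demand."""
    static_cost = max(hourly_demand) * len(hourly_demand) * price_per_unit_hour
    elastic_cost = sum(hourly_demand) * price_per_unit_hour
    return static_cost, elastic_cost

demand = [2, 2, 3, 8, 10, 6, 3, 2]  # servers needed in each of 8 hours (hypothetical)
print(provisioning_costs(demand, 0.50))  # (40.0, 18.0)
```

Here the peak-sized fleet costs more than twice as much, because it pays for 10 servers during hours that need only 2 or 3.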

In conclusion, optimized allocation is an indispensable element of a well-functioning distributed service model. It requires a comprehensive understanding of workload patterns, resource capacities, and network topologies. Successful allocation strategies are essential for achieving the desired outcomes of high performance, low latency, and efficient resource utilization. A well-designed strategy should show measurable improvement in key performance indicators such as latency and utilization. The continued evolution of allocation techniques is likely to drive further advances in the performance and efficiency of distribution.

6. Efficient Delivery

Efficient delivery constitutes a critical performance parameter of any distributed service. The rapid and reliable transfer of data and resources from source to destination directly impacts the user experience and the overall effectiveness of the service. The cause-and-effect relationship is clear: suboptimal delivery mechanisms introduce latency, reduce throughput, and degrade the perceived value of the arrangement. For instance, a distributed software update mechanism reliant on outdated protocols would likely result in prolonged installation times and increased network congestion, diminishing the utility of the service. Efficient delivery encompasses a multifaceted approach to data transmission, including optimized routing, caching strategies, and content compression.
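Content compression is the easiest of these techniques to demonstrate. The sketch uses Python's standard `gzip` module. Repetitive text such as CSV, JSON, or logs shrinks substantially, while already-compressed media such as video or JPEG images gains little. The helper name `compress_for_transfer` and the sample payload are illustrative.

```python
import gzip

def compress_for_transfer(data: bytes, level: int = 6) -> bytes:
    """Gzip-compress a payload before sending; the receiver restores it with gzip.decompress."""
    return gzip.compress(data, compresslevel=level)

# Hypothetical repetitive payload, similar to a CSV export or log batch.
payload = ("region,status,latency_ms\n" + "eu-west,200,42\n" * 2000).encode("utf-8")
packed = compress_for_transfer(payload)
assert gzip.decompress(packed) == payload  # lossless round trip
print(f"{len(payload)} bytes -> {len(packed)} bytes")
```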

The practical significance of efficient delivery becomes particularly apparent in high-demand scenarios, such as streaming video or large file transfers. Content delivery networks (CDNs), for example, are predicated on the principle of efficient delivery. By strategically caching content at edge servers closer to end-users, CDNs significantly reduce latency and improve download speeds. The absence of such efficient delivery mechanisms would render these services impractical for many users. Consider the implications for scientific research, where large datasets must be transferred across geographically dispersed locations. The time required to transfer these datasets can have a profound impact on the pace of discovery. Efficient delivery is crucial for unlocking the full potential of distributed resources, enabling them to be accessed and utilized effectively, irrespective of location.
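Edge caching can be sketched as a least-recently-used (LRU) cache placed in front of an origin server. Hits are served locally. Misses are fetched from the origin and stored, and the least recently used entry is evicted when the cache is full. LRU is one common eviction policy, chosen here for illustration; real CDNs also honor expiry headers and use other policies. The class and the origin-fetch callable are hypothetical.

```python
from collections import OrderedDict
from typing import Callable

class EdgeCache:
    """LRU cache standing in for an edge server in front of an origin."""

    def __init__(self, capacity: int, fetch_from_origin: Callable[[str], bytes]):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self.fetch_from_origin = fetch_from_origin
        self._store: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        if key in self._store:
            self._store.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._store[key]
        self.misses += 1
        value = self.fetch_from_origin(key)  # slow path: go to the origin
        self._store[key] = value
        if len(self._store) > self.capacity:
            self._store.popitem(last=False)  # evict least recently used
        return value

origin_requests = []
def origin(key: str) -> bytes:
    origin_requests.append(key)
    return f"content of {key}".encode()

edge = EdgeCache(capacity=2, fetch_from_origin=origin)
for path in ["/a", "/b", "/a", "/c", "/b"]:
    edge.get(path)
print(edge.hits, edge.misses, origin_requests)  # 1 4 ['/a', '/b', '/c', '/b']
```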

In conclusion, efficient delivery forms an integral component of a robust and effective distributed service. It is not merely a desirable feature but rather a necessity for ensuring that users can access and utilize distributed resources in a timely and reliable manner. The challenges associated with achieving efficient delivery are multifaceted, encompassing network optimization, data compression, and caching strategies. As distributed environments become increasingly complex, the importance of efficient delivery will only continue to grow, demanding further advancements in network technologies and data transmission protocols.

Frequently Asked Questions Regarding Distri Service

The following addresses common inquiries related to the coordinated provision of resources across a network. The aim is to provide clarity and insight into the core principles and operational aspects of this method.

Question 1: What distinguishes a well-architected “distri service” from a basic network setup?

A fundamental difference lies in the level of abstraction and management. Basic network setups primarily focus on connectivity, whereas a coordinated provision model provides a higher-level abstraction, managing resources and their allocation centrally. Furthermore, a well-architected instance includes service-level agreements (SLAs), performance monitoring, and automated scaling capabilities not typically found in basic network arrangements.

Question 2: What are the primary cost considerations associated with implementing a “distri service”?

Cost considerations encompass initial setup expenditures, ongoing operational expenses, and potential scalability investments. Setup expenditures include hardware, software, and integration costs. Operational expenses involve maintenance, monitoring, and support personnel. Scalability investments factor in anticipated growth in resource demands and the associated infrastructure upgrades.

Question 3: How does a coordinated provision approach enhance security compared to traditional decentralized systems?

Centralized management allows consistent security policy enforcement across all distributed resources. This closes gaps caused by inconsistent configurations and simplifies rolling out security updates and patches. The central control plane itself, however, becomes a high-value target and must be protected accordingly. Traditional decentralized systems, by contrast, often suffer from inconsistent security practices and fragmented management, which can leave them more vulnerable to attacks.

Question 4: What are the critical performance indicators (KPIs) for evaluating the effectiveness of a “distri service”?

Key performance indicators include uptime, latency, throughput, and resource utilization. Uptime measures the availability of the service. Latency reflects the responsiveness of the system. Throughput indicates the volume of data transferred. Resource utilization assesses how efficiently the available resources are being used.
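The four KPIs can be computed from raw observations over one measurement window. The sketch makes the following assumptions:

- uptime is derived from periodic health checks;
- latency is reported as a nearest-rank 95th percentile;
- throughput is expressed in megabits per second;
- utilization is CPU busy time over available CPU time.

All function names and sample figures are illustrative.

```python
import math

def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile; p must be in (0, 100]."""
    if not values or not 0 < p <= 100:
        raise ValueError("need at least one value and 0 < p <= 100")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]

def summarize_kpis(checks_up: int, checks_total: int, latencies_ms: list[float],
                   bytes_transferred: int, window_s: float,
                   cpu_busy_s: float, cpu_available_s: float) -> dict[str, float]:
    """Compute uptime, p95 latency, throughput, and utilization for one window."""
    return {
        "uptime_pct": 100 * checks_up / checks_total,
        "p95_latency_ms": percentile(latencies_ms, 95),
        "throughput_mbps": bytes_transferred * 8 / window_s / 1e6,
        "utilization_pct": 100 * cpu_busy_s / cpu_available_s,
    }

latencies = [float(ms) for ms in range(10, 101, 10)]  # hypothetical samples
print(summarize_kpis(999, 1000, latencies, 750_000_000, 60, 30, 120))
```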

Question 5: How does the choice of network topology impact the performance and reliability of a “distri service”?

Network topology plays a crucial role in determining the efficiency and resilience of a distributed offering. Star topologies offer centralized management but are vulnerable to single points of failure. Mesh topologies provide high redundancy but are more complex to manage. The optimal topology depends on the specific requirements of the application and the criticality of its resources.
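The management trade-off between star and mesh topologies shows up in the number of links each requires. A star with n nodes needs n - 1 links, all to the hub, so losing the hub disconnects everyone. A full mesh needs n(n - 1)/2 links, which grows quadratically. The helper below is a hypothetical illustration of that arithmetic.

```python
def link_count(topology: str, n: int) -> int:
    """Number of links needed to connect n nodes in the given topology."""
    if n < 1:
        raise ValueError("n must be at least 1")
    if topology == "star":
        return n - 1             # every non-hub node links only to the hub
    if topology == "full_mesh":
        return n * (n - 1) // 2  # every pair of nodes is directly linked
    raise ValueError(f"unknown topology: {topology}")

for n in (10, 50):
    print(n, link_count("star", n), link_count("full_mesh", n))  # 10 9 45, then 50 49 1225
```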

Question 6: What measures can be taken to mitigate the risks associated with vendor lock-in when utilizing a “distri service” from a third-party provider?

To mitigate vendor lock-in, organizations should adopt open standards, employ multi-cloud strategies, and ensure data portability. Open standards facilitate interoperability with different platforms. Multi-cloud strategies allow for workload distribution across multiple providers. Data portability ensures that data can be migrated easily from one provider to another.

In summary, a comprehensive understanding of architecture, costs, security, performance, topology, and vendor risks is essential for effectively leveraging a coordinated approach.

The subsequent section offers practical tips for implementing and optimizing a coordinated provision model.

“Distri Service” Implementation and Optimization Tips

Implementing and optimizing a coordinated provision model involves strategic planning and diligent execution. The following recommendations support effective implementation and help maximize return on investment.

Tip 1: Define Clear Service-Level Agreements (SLAs). Establish concrete and measurable expectations regarding uptime, performance, and support response times. SLAs ensure accountability and provide a framework for performance monitoring and issue resolution. For example, specify a minimum uptime of 99.99% with a guaranteed response time of 1 hour for critical incidents.

Tip 2: Prioritize Security from the Outset. Incorporate security considerations into every stage of the implementation process, from architectural design to operational procedures. Implement robust authentication mechanisms, encryption protocols, and intrusion detection systems. Regularly conduct security audits and penetration testing to identify and address vulnerabilities.

Tip 3: Implement Comprehensive Monitoring and Logging. Establish a system for continuously monitoring performance metrics and logging all relevant events. This enables proactive identification and resolution of issues, as well as facilitates performance optimization and capacity planning. Collect metrics such as CPU utilization, memory consumption, network bandwidth, and error rates.
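Logging metrics as one JSON object per line makes them easy for log-aggregation and monitoring tools to parse. The sketch below uses Python's standard `logging` and `json` modules. The logger name, metric names, and labels are hypothetical, and the record layout is an assumption rather than any particular tool's schema.

```python
import json
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(message)s")
metrics_log = logging.getLogger("distri.metrics")  # hypothetical logger name

def log_metric(name: str, value: float, **labels: str) -> str:
    """Emit one metric sample as a single JSON line and return that line."""
    record = {"ts": time.time(), "metric": name, "value": value, **labels}
    line = json.dumps(record, sort_keys=True)
    metrics_log.info(line)
    return line

log_metric("cpu_utilization", 0.72, node="fra-01", region="eu-west")
log_metric("error_rate", 0.004, node="fra-01", region="eu-west")
```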

Tip 4: Automate Routine Tasks. Leverage automation tools to streamline routine tasks such as provisioning, configuration management, and software deployment. This reduces manual effort, minimizes errors, and improves overall efficiency. For example, use infrastructure-as-code tools to automate the deployment and configuration of resources.
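Infrastructure-as-code tools generally work by comparing a declared desired state with what actually exists and computing the changes needed to converge. This is similar in spirit to the "plan" step that tools such as Terraform display. The sketch reproduces only that comparison. The resource names and attributes are hypothetical, and no real tool's API is used.

```python
def plan_changes(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, list[str]]:
    """List the resources to create, delete, or update so that actual matches desired."""
    return {
        "create": sorted(desired.keys() - actual.keys()),
        "delete": sorted(actual.keys() - desired.keys()),
        "update": sorted(name for name in desired.keys() & actual.keys()
                         if desired[name] != actual[name]),
    }

desired = {"web-1": {"size": "medium"}, "web-2": {"size": "medium"}, "db-1": {"size": "large"}}
actual = {"web-1": {"size": "small"}, "db-1": {"size": "large"}, "old-1": {"size": "small"}}
print(plan_changes(desired, actual))
# {'create': ['web-2'], 'delete': ['old-1'], 'update': ['web-1']}
```

Because the plan is derived from declarations rather than from manual steps, running it again after the changes are applied yields an empty plan.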

Tip 5: Optimize Network Topology. Design the network topology to minimize latency and maximize throughput. Consider factors such as geographic distribution of users, bandwidth requirements, and network congestion. Utilize techniques such as content caching, load balancing, and quality of service (QoS) to optimize network performance.

Tip 6: Implement a Scalable Architecture. Design the service with scalability in mind, ensuring that it can accommodate future growth in user demand and data volume. Utilize technologies such as containerization, microservices, and cloud computing to enable horizontal scaling and dynamic resource allocation.

Tip 7: Conduct Regular Performance Tuning. Continuously monitor performance metrics and identify opportunities for optimization. Regularly tune system parameters, database configurations, and application code to improve performance and resource utilization. For example, adjust database indexing strategies or optimize query performance.
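The effect of an indexing change can be checked by inspecting the query plan before and after adding the index. SQLite (bundled with Python as `sqlite3`) is used here only because it makes the example self-contained; other databases expose similar `EXPLAIN` facilities with different output. The table, index name, and data are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany("INSERT INTO orders (customer_id, total) VALUES (?, ?)",
                 [(i % 500, i * 1.5) for i in range(10_000)])

QUERY = "SELECT total FROM orders WHERE customer_id = ?"

def query_plan(sql: str) -> list[str]:
    """Return the human-readable 'detail' column of each EXPLAIN QUERY PLAN row."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, (42,))]

before = query_plan(QUERY)  # full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = query_plan(QUERY)   # index lookup
print(before)
print(after)
```

The exact wording of the plan varies between SQLite versions. The change from a `SCAN` to a `SEARCH ... USING INDEX` step is the signal that the new index is being used.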

Adherence to these best practices facilitates a more efficient, secure, and scalable service deployment.

The following section will provide concluding remarks on the role and future of distributed service models.

Conclusion

The preceding analysis has examined the characteristics, implementation strategies, and optimization techniques associated with coordinated access provision. Key considerations include resource availability, network accessibility, centralized management, scalable architecture, optimized allocation, and efficient delivery. A thorough understanding of these elements is essential for successful deployment and operation.

Effective use of the coordinated provision model requires continuous vigilance and adaptation to evolving technology. Organizations must prioritize security, performance, and scalability to derive maximum benefit from distributed resources. Applying these principles strategically is likely to shape the future of efficient resource management. Further research and development are warranted to address emerging challenges and unlock new possibilities.